Astronomy appears to be in peril. It is increasingly inhabited by scholars who seem more interested in terrestrial politics than in celestial objects, and who at times perceive the pursuit of natural facts as threatening.

This became obvious in recent years, when the proposed Thirty Meter Telescope (TMT) in Hawaii was blocked by Indigenous protestors who regard the mountain on which it is to be built as sacred. With a resolution 12 times finer than that of the Hubble Space Telescope, the TMT could offer abundant new observational opportunities in astronomy and astrophysics. But a protest by advocates within the astronomy community, in support of the Indigenous groups, means that it is now an open question whether the TMT will ever be built.

Mauna Kea is sacred to Native Hawaiian people. The Hawaiians who have been protesting construction of the Thirty Meter Telescope are trying to protect a sacred site from further desecration. I stand in solidarity with them. #TMT https://t.co/uInzGrrQp6

— Elizabeth Warren (@ewarren) July 23, 2019

Last week brought another ominous sign of the times, when the eminent astronomer John Kormendy retracted an article intended for publication in the Proceedings of the National Academy of Sciences and withdrew it from a preprint website. The article presented statistical results on evaluating the “future impact” of astronomers’ research as a means to “inform decisions on resource allocation such as job hires and tenure decisions.” Online critics attacked Kormendy’s use of quantitative metrics, which could be seen as casting doubt on the application of diversity criteria in personnel decisions, whereupon Kormendy felt compelled to issue an abject apology (more on this below).

Of course, statistical assessments of real-world human data are always vulnerable to systematic biases that can unjustly distort the results. And I would never claim that Kormendy’s work is without flaws. The conventional way to critique such work is for other scientists to propose alternative hypotheses and study other data sets, hunting for errors in the original research. That is the proper way to conduct science. Those who declare in advance that someone else’s conclusions are “damaging” or menacing, without additional analysis or evidence and without asking whether those conclusions are correct, might think about changing careers.

For over 35 years, I’ve taught astronomy (as well as physics) at a number of research institutions on three continents. However, I would not call myself an astronomer. My training is in theoretical particle physics, and my professional forays into astrophysics and cosmology stem from a long-standing interest in examining natural phenomena from a variety of disciplinary perspectives, including astronomy, as a means of testing fundamental ideas about nature.

Nonetheless, over the course of my career I have worked with a great many astronomers, and have consulted and learned from far more. I am familiar enough with the profession’s social and professional dynamics to be concerned.

John Kormendy is one of the astronomers whose work I have known for decades, and whose research overlaps with my own interest in dark matter and the formation of structure in the universe. I met him briefly while visiting the Dominion Astrophysical Observatory in Victoria, Canada.

That was a long time ago. But when I recently checked with a colleague to see how Kormendy’s reputation had fared in the interim, I was told that he is still considered one of the “world’s leading researchers on the creation and structure of galaxies.” He is a member of the National Academy of Sciences and has received various prizes in his field. His research has been cited by other astronomers more than 33,000 times.

Kormendy has long been interested in metrics that scientists can use to make their assessments of potential hires and promotions less subjective. As in all areas where decisions depend on human perceptions, no methodology is guaranteed to work universally. Though I personally wouldn’t spend my own research time exploring this area, I appreciate that some are willing to investigate it systematically, in spite of the many obvious obstacles.

After five years of gathering data and consulting colleagues around the world, Kormendy produced a book on the subject, entitled Metrics of Research Impact in Astronomy and published in August by the Astronomical Society of the Pacific. He also submitted a related (and now retracted) paper to the Proceedings of the National Academy of Sciences (PNAS) on November 1st, under the title ‘Metrics of research impact in astronomy: Predicting later impact from metrics measured 10-15 years after the PhD.’

Kormendy began his paper cautiously, recognizing that his emphasis on applying quantitative metrics to human-resource evaluation would be viewed with skepticism by those who claim that such metrics embed systemic biases, and that their use presents obstacles to inclusion. Notwithstanding such anticipated concerns, he argued that

we have to judge the impact that [a] candidate’s research has had or may yet have on the history of his or her subject. Then metrics such as counts of papers published and citations of those papers are often used. But we are uncertain enough about what these metrics measure so that arguments about their interpretation are common. Confidence is low. This can persuade institutions to abandon reliance on metrics. [But] we would never dare to do scientific research with the lack of rigor that is common in career-related decisions. As scientists, we should aim to do better.

He makes it clear up front that quantitative metrics cannot tell us everything we need to know about a candidate. In the “Significance Statement” on the first page, he writes:

This paper develops machinery to make quantitative predictions of future scientific impact from metrics measured immediately after the ramp-up period that follows the PhD. The aim is to resolve some of the uncertainty in using metrics for one aspect only of career decisions—judging scientific impact. Of course, those decisions should be made more holistically, taking into account additional factors that this paper does not measure (my emphasis).

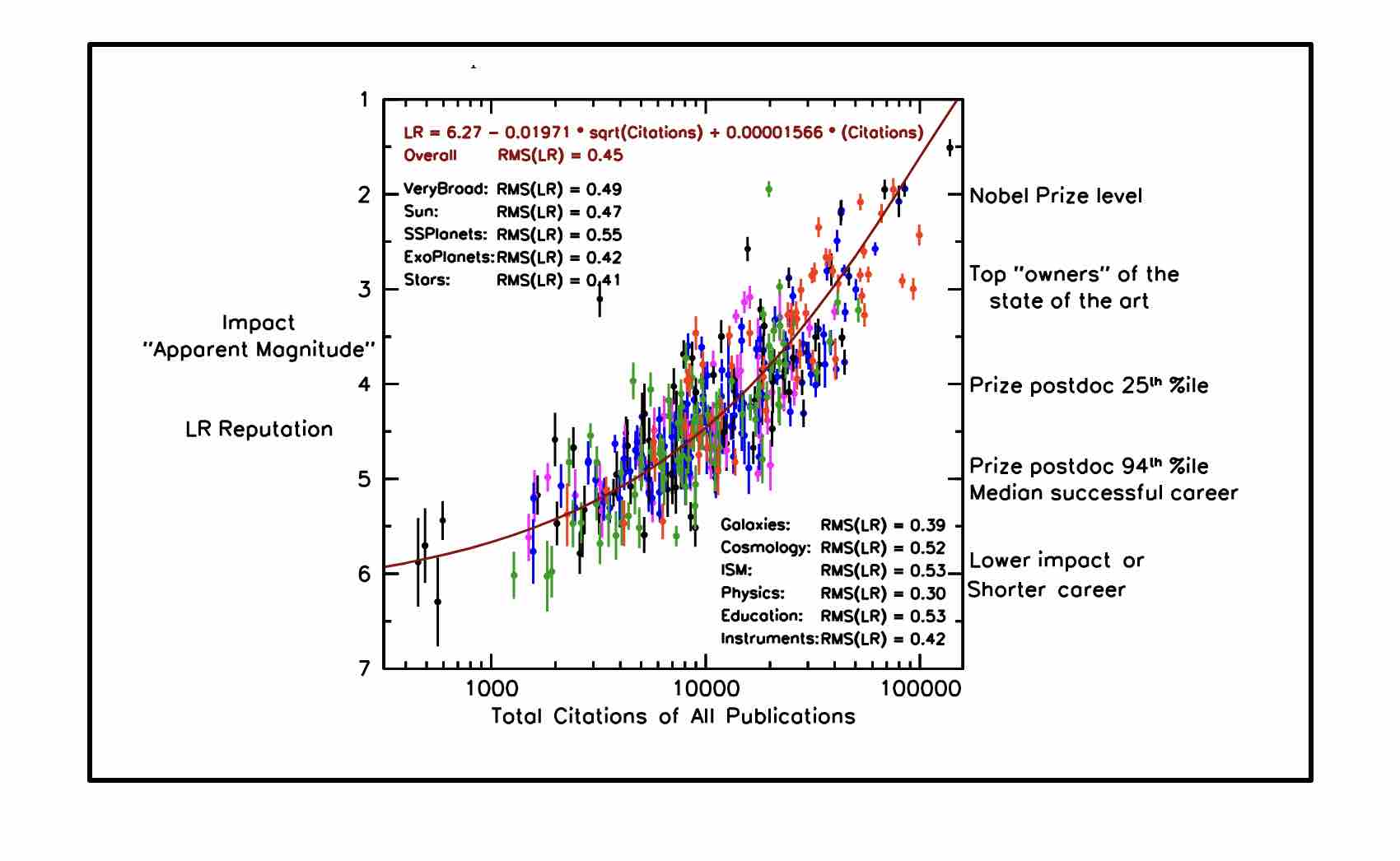

The bulk of the paper focuses on three of the 10 metrics he considers (citations of refereed papers, citations normalized by the number of coauthors, and first-author citations), which Kormendy attempts to develop into a prediction machine by correlating the metrics measured early in a researcher’s career with that researcher’s later “impact.”

This latter index was constructed by asking 22 scientists who are well known in their respective subfields to evaluate the impact of 512 astronomers from 17 major research universities around the world, whose early-career metrics could then be correlated with those evaluations. Specifically, Kormendy sought to determine whether these evaluations of individuals’ “impact,” made 10 to 15 years after they had received their PhDs, correlated significantly both with the metrics measured at that time and with the corresponding metrics from the early period following the PhD (in other words, whether the metrics could predict the evaluations rendered by the advisory panel). The paper claimed to demonstrate, not surprisingly, that averaging the three metrics generally produces a better predictive estimate than any one of them does separately.

One can question many aspects of this model, including the significance of its conclusions. That early-career citation counts correlate with later impact may seem almost tautological. (Why would you not expect that having a large number of citations early on in one’s career would be correlated with attaining a reputation as a high-impact scholar later on?) Also, the proposition that averaging several metrics produces a better predictive fit than does any individual metric in isolation would only really be noteworthy if it turned out not to be true.

Finally, one can always question the subjective assessments of the 22 designated sages tasked with measuring “impact,” especially since their assessments (and Kormendy’s own choice of who would perform this task) may reflect the same kind of subjectivity that Kormendy’s whole project is designed to avoid.

I am not sure Kormendy understood the can of worms he was opening. But the response from the astronomy Twittersphere was swift. Anyone familiar with the standard concerns of those who view any quantitative metric used in assessment (including standardized test results) as inherently suspect at best, or sexist and racist at worst, could have anticipated the arguments. Kormendy further tempted fate by focusing only on subjects from well-known schools, and by recruiting mostly well-known senior male scientists for his expert “impact” panel.

As it happened, those who rained criticism on Kormendy did not limit themselves to these generalities. Critics also claimed specifically that junior researchers who read Kormendy’s paper would feel threatened, or that their careers might be harmed by selection committees whose members were now further encouraged to be systematically biased against them.

Nevertheless, even imperfect quantitative metrics can improve on qualitative assessments made without them. And it is quite true that Kormendy’s analysis, if used in recruitment or promotion, would expose, for better or worse, those whose metrics are low. There may be many reasons for low scores, including bias. But low scores can also mean that the researchers being evaluated are simply not productive or impactful. Either way, the analysis exposes potential problems (with either the candidate or his or her academic environment) that could then be addressed. Moreover, however much one might dislike quantitative, or “objective,” merit-based metrics, the alternatives, which include nepotism and cronyism, have historically tended to be worse.

Yet by the standards of modern cancel culture, the online barrage of criticism against Kormendy did not seem especially ferocious. Unlike other furors, this one did not feature viral demands for his sacking or other forms of cancellation. But some other pressure must surely have been brought to bear on Kormendy, because he not only retracted his published paper and put further publication of his book on hold, but also posted an apology whose language seemed out of all proportion to his actions:

I apologize most humbly and sincerely for the stress that I have caused with the PNAS preprint, the PNAS paper, and my book on using metrics of research impact to help to inform decisions on career advancement. My goal was entirely supportive. I wanted to promote fairness and concreteness in judgments that now are based uncomfortably on personal opinion. I wanted to contribute to a climate that favors good science and good citizenship. My work was intended to be helpful, not harmful. It was intended to decrease bias and to improve fairness. It was hoped to favor inclusivity. It was especially intended to help us all to do the best science that we can … But intentions do not, in the end, matter. What matters is what my actions achieve. And I now see that my work has hurt people. I apologize to you all for the stress and the pain that I have caused. Nothing could be further from my hopes. The PNAS paper and … preprint have been withdrawn as thoroughly as the publication system allows. The … withdrawal—if accepted by them—should be in the Wednesday posting … I fully support all efforts to promote fairness, inclusivity, and a nurturing environment for all. Only in such an environment can people and creativity thrive.

It is hard to know what specifically induced this kind of Maoist mea culpa. But Kormendy (or someone with authority over him) was presumably swayed by the online tempest. One unfortunate effect is that anyone who watched this play out will be deterred from making inquiries of their own in this field, for fear of meeting the same fate. This is one reason why scientific articles should never be retracted simply because they might cause offense. Truth can hurt, but too bad.

What makes this example particularly sad is that Kormendy’s intent was clearly to stimulate healthy discussion and improve fairness, even though the mobs claimed (and, if his apology is to be taken at face value, convinced him) that he was doing exactly the opposite. In his lengthy apology, he writes that “intentions do not, in the end, matter.” But of course they matter. And in this case, not only were Kormendy’s intentions benign, but his original paper actually addressed (and even echoed) many of the criticisms he later received:

I emphasize that the goal of … this paper is to estimate impact accrued, not impact deserved. Historically, some people who made major contributions were, at the time, undervalued by the astronomical community. I hope that this work will help to make people more aware of the dangers of biased judgments and more focused on giving fair credit. How to make judgment and attribution more fair is very important. But it is not directly the subject of this work…

My goal has been to lend a little of the analysis rigor that we use when we do research to the difficult and subjective process of judging research careers. But I do not suggest that we base decisions only on metrics. Judgments—especially decisions about hiring and tenure—should be and are made more holistically, weighing factors that metrics do not measure. For faculty jobs, these include teaching ability, good departmental citizenship, collegiality, and the “impedance match” between a person’s research interests and the resources that are available at that institute … Also, many factors other than research have, in the 2020s, become deservedly prominent in resource decisions. Heightened awareness of the importance of inclusivity has the result that institutions put special emphasis on redressing historically underrepresented cohorts. Urgent concerns are gender balance and the balance of ethnic minorities. How, relatively, to weight research impact and these concerns are issues that each institution must decide for itself … My job is restricted to one aspect only of career decisions—the judgment of research impact as it has already happened and as it can, with due regard for statistical uncertainties and outliers, be predicted to happen in future.

I emphasize again that metrics measure the impact that happens, not the impact that should happen. It helps us to understand what happens in the real world. The real world is the only one that we have to live in. My hope is that a healthy—but not excessive—investment in impact measures will make a modest contribution to better science.

Unfortunately for Kormendy, the "real world" is also a place where victimization and inequity have come to dominate many academic discussions, to the point where making a modest contribution to better science can be attacked—and, in this case, literally expunged—by those who believe that quantitative exploration of certain data sets can be harmful or threatening.